I had an opportunity to chat with Dr. Lewis about his research and Google. I did not record the interview, so this is not a standard “Q and A”.

The 5 questions I asked Dr. Lewis were broadly categorized into 3 groups: 2 questions about his research, 2 questions about working at Google, and 1 general question. I’ve done my best to paraphrase Dr. Lewis.

Measuring the Effects of Advertising

1. Getting more data can be both a blessing and a curse. Often, although one may add more of the “signal” we are interested in measuring, we also add more “noise.” In your studies of online advertising, how does this “increasing” noise affect your ability to use traditional statistical tools, such as the “5% significance level”?

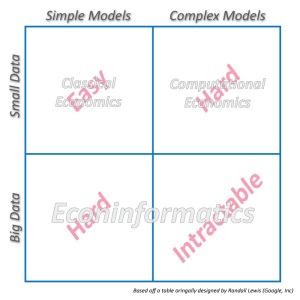

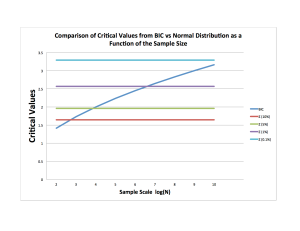

The challenge of online advertising is that the effect of advertising exists and is typically both economically large and statistically tiny. A model for a well-run experiment on a profitable ad campaign may yield a partial R-squared of about 0.000005, which most economists would consider to be zero. Such a tiny R-squared implies that one needs a massive sample size (2+ million unique users) to obtain statistically significant estimates.
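To get a feel for why such a tiny R-squared demands millions of users, here is a rough back-of-the-envelope sketch. It is not Dr. Lewis’s calculation; it assumes the standard large-sample approximation t² ≈ n·R²/(1 − R²) for a single coefficient, a two-sided 5% test, and a function name (`required_sample_size`) that I made up for illustration.

```python
from statistics import NormalDist


def required_sample_size(partial_r2, alpha=0.05, power=0.9):
    """Approximate sample size needed to detect an effect with this partial R-squared.

    Assumption: large-sample approximation t^2 ~= n * R^2 / (1 - R^2),
    two-sided test at level alpha, normal critical values.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = z.inv_cdf(power)          # extra margin needed for the desired power
    return (z_alpha + z_power) ** 2 * (1 - partial_r2) / partial_r2


# power=0.5 means the expected t-statistic sits exactly on the 5% threshold
n_threshold = required_sample_size(0.000005, power=0.5)
n_90 = required_sample_size(0.000005, power=0.9)
print(f"{n_threshold:,.0f} users just to reach the threshold on average")
print(f"{n_90:,.0f} users for 90% power")
```

Under these assumptions, merely expecting to hit the 5% threshold takes several hundred thousand users, and detecting the effect reliably (90% power) takes roughly two million, which is in line with the figure quoted above.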

This is where being Yahoo or Google, firms whose ad platforms reach large numbers of users, has a clear advantage of scale. They can run massive experiments involving tens or hundreds of millions of users. But this leads to another, bigger problem: the Data Generating Process (DGP).

Collecting data is no longer very difficult or expensive for firms. Quantity is not a problem. But quality (how the data is generated, a.k.a. the DGP) remains a persistent problem.

This is captured best in the classic phrase “garbage in, garbage out.” There is no shortage of garbage data, and garbage data is growing faster than quality data. Garbage data is easy to generate. Quality data is not. Quality data, meaning data that is free of bias, requires randomization and meticulous care and maintenance of the DGP.

When searching for a tiny effect, as in Dr. Lewis’s online advertising research, understanding the DGP is paramount. Any bias that cannot be properly controlled in your model can devastate your estimates, especially in settings where both the treatment (advertising) and the outcome (purchases) are observed not only by the researcher but also by other systems (ad servers and other optimization algorithms). In these circumstances, worst-case bias scenarios (e.g., the users who are most likely to buy a product are the ones who see ads for it) are not uncommon.

Ideally, researchers want control over the entire DGP because this facilitates alignment between the DGP and the model. There is no room for a brute force approach when trying to find such a faint signal in so much noise. In the particular case of online advertising, the model must be perfectly aligned to fit the DGP or the estimates will be inconsistent.

A perfect model is fragile and inflexible. Dr. Lewis said that one should think of models in the online advertising world like particle accelerators. A particle accelerator is exactly designed to find a very specific signal from a carefully generated set of experimental data. Bias leaking into the DGP via misaligned systems is akin to an earthquake misaligning a particle accelerator. Both are too finely tuned to handle such disturbances — and the data will demonstrate the disturbances in both systems.

According to Dr. Lewis, one begins to appreciate the difficulty and challenge posed by a “Big Data” problem like online advertising when one attempts to design a model that is 99% correctly aligned with the DGP. This is a dauntingly difficult task. And even if one succeeds, the results can be hard to believe.

One such result is that online advertising may, in certain cases, have little effect on consumer behavior and may not be worth the money. Take, for example, the study by economists at eBay, who found that biased analyses likely cost eBay well over $100M in ineffective advertising expenditures that were erroneously pitched as eBay’s most effective ad spending.

2. From your paper, it would appear folks in the advertising world do not share the obsession economists have with bias. For data folks out there unfamiliar with bias, why is it such a big problem and why can’t throwing more data in the mix solve the problem?

Selection bias is an ever-present problem for economists. Targeted advertising, for example, is an industry standard. However, without randomization, measuring the success of an ad campaign is nearly impossible, no matter how much data you have. And most online advertising firms are not randomly targeting consumers (that would defeat the “target” in “targeted” advertising). What we have then is an industry dependent on biased data; those who see ads are fundamentally different from those who do not.

Perhaps paying for targeted ads is a good strategy during an ad campaign. Perhaps the targeted ads are working. But unless the data generated from targeted ad campaigns includes random assignment, it will, by construction, be plagued by selection bias. This, in turn, makes it impossible to do any sort of causal inference about the effectiveness of the ad campaign. And yet that is exactly what many advertising firms tend to do: make unsubstantiated causal claims.
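A toy simulation can make the selection-bias point concrete. This is only a sketch with made-up numbers: the purchase probabilities, the “intent” variable, and the `naive_lift` function are all hypothetical and not taken from Dr. Lewis’s work. Every simulated user gets the same small true lift from seeing an ad; the only thing that changes is whether ads are assigned by targeting or by a coin flip.

```python
import random


def naive_lift(n, targeted, true_lift=0.002, seed=0):
    """Difference in purchase rates between users who saw an ad and those who did not.

    All parameters are hypothetical. `intent` is a latent propensity to buy
    that the ad system can exploit but the naive comparison ignores.
    """
    rng = random.Random(seed)
    shown = [0, 0]   # index 0 = no ad, index 1 = saw ad
    bought = [0, 0]
    for _ in range(n):
        intent = rng.random()
        baseline = 0.01 + 0.04 * intent          # high-intent users buy more anyway
        if targeted:
            sees_ad = rng.random() < intent      # targeting: high intent -> more ads
        else:
            sees_ad = rng.random() < 0.5         # randomization: a fair coin flip
        buys = rng.random() < baseline + (true_lift if sees_ad else 0.0)
        shown[int(sees_ad)] += 1
        bought[int(sees_ad)] += int(buys)
    return bought[1] / shown[1] - bought[0] / shown[0]


targeted_estimate = naive_lift(400_000, targeted=True)
randomized_estimate = naive_lift(400_000, targeted=False)
print(f"true lift:           {0.002:.4f}")
print(f"targeted estimate:   {targeted_estimate:.4f}")
print(f"randomized estimate: {randomized_estimate:.4f}")
```

In this setup the targeted comparison overstates the true lift several times over, because it mostly measures who was shown the ad rather than what the ad did. Adding more users only makes that wrong number more precise; the randomized comparison is the one that hovers around the true lift.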

At Google

3. For economists a ‘big’ data set can be a few gigabytes in size. I’m assuming this is laughably small for someone at Google. What’s it like to do data analysis at Google and what tools/techniques do you use for handling and making sense of such truly massive amounts of data?

Dr. Lewis summed up working with “Big Data” at Google succinctly:

“Big Data in practice is just glorified computational accounting.”

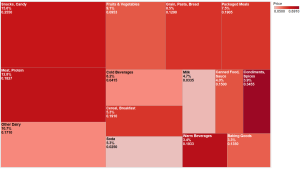

Data is generally collected for some basic business tabulation to settle accounts. For example, large advertising companies collect data on clicks and ad impressions primarily for billing purposes.

Additional “Big Data” applications have been built on this primary business case for revenue optimization. “Big Data” is now a race to leverage the new granularity of such accounting data. Naturally, these new applications can influence what data is recorded thanks to the efficiency of computational tools.

“Big Data” is a storage challenge. Day to day, when data is needed, it is never downloaded raw; it would be too big. Enormous data sets are instead refined into much smaller sets before extraction, using tools like SQL or Hadoop.
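As a small illustration of refining before extracting, the sketch below aggregates raw event rows into a per-campaign summary inside the database, so only the summary is pulled out. It uses Python’s built-in `sqlite3` module as a stand-in for a real data warehouse; the `ad_events` table, its columns, and the sample rows are invented for the example and say nothing about how Google stores its data.

```python
import sqlite3

# In-memory database standing in for a much larger event log (hypothetical schema)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ad_events (user_id INTEGER, campaign TEXT, event TEXT)")
conn.executemany(
    "INSERT INTO ad_events VALUES (?, ?, ?)",
    [
        (1, "spring", "impression"), (1, "spring", "click"),
        (2, "spring", "impression"), (3, "summer", "impression"),
        (3, "summer", "impression"), (3, "summer", "click"),
    ],
)

# Aggregate where the data lives; extract only one row per campaign
summary = conn.execute(
    """
    SELECT campaign,
           SUM(CASE WHEN event = 'impression' THEN 1 ELSE 0 END) AS impressions,
           SUM(CASE WHEN event = 'click' THEN 1 ELSE 0 END) AS clicks
    FROM ad_events
    GROUP BY campaign
    ORDER BY campaign
    """
).fetchall()

for campaign, impressions, clicks in summary:
    print(campaign, impressions, clicks)
```

The same idea scales up: the raw log might have billions of rows, but the extracted summary has one row per campaign.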

As for doing analysis, there are loads of tools to choose from but it is best to learn analytical software that facilitates sharing and collaboration. Don’t be the only person using R when your team is using Python.

4. (Matt’s question) What skills and educational background does it take to get a job doing data analysis at Google?

In rough order of importance, Dr. Lewis suggested the following programming skills:

Engineering — C/C++, Java, Python, Go, SQL.

Quantitative Analysts (Data Scientists) — Python (SciPy/NumPy/Pandas), Linux/Bash/Core Utilities expertise, SQL, R (open-source), Matlab/Julia/Octave, Stata/SAS/SPSS (and other less broadly used languages).

General

5. What are your feelings about the mainstream explosion of the term “Big Data”?

Dr. Lewis is glad the term exists and that people are thinking about it, but he wants people to get real about it in the world of causal inference (“econinformatics”). For describing data (summary stats, etc.), “Big Data” has been great. For finding pockets of statistically informative and clean causality in data, “Big Data” has also been great. But “Big Data” in practice is more about arbitrarily precise correlations that tell us little about what most decision-makers care about: the causal effects.